SMART ROOM: APPLICATION OF IMAGE PROCESSING TECHNOLOGY

If you search Google for the keyword “smart home”, you will get nearly 1.7 million results. This reflects both the large amount of research and development on this kind of room and the strong public interest in it. Smart rooms are becoming necessary not only for medical and welfare purposes but also in offices and ordinary homes. Moreover, a smart home need not stop at using smart devices: image processing can make it easier, more convenient, and more flexible to use. A human-machine interface that conveys human intentions is clearly an important part of building a smart room. This article presents an example of applying image processing to support a smart room.

Overview of gesture recognition for smart rooms

For a human-friendly interface, the smart room must be able to locate the operator and understand his/her gestures. To make operation easier and more powerful, we mount a CCD camera on the ceiling and focus on hand gestures. First, the system finds a person in the CCD camera image and extracts the hand region using color information. Next, the finger regions are extracted from the hand region and the number of fingers is recognized. The hand is regarded as pointing when exactly one finger is extracted and the hand does not move.

Figure 1 - Illustration of a smart room with a CCD camera on the ceiling
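The pointing condition described above (exactly one extracted finger and a hand that does not move) can be checked frame by frame. The Python sketch below is a minimal illustration, not the authors' implementation: the class name `PointingDetector`, the number of frames inspected, and the pixel tolerance used to decide that the hand "does not move" are all hypothetical choices, since the article gives no values for them.

```python
import math
from collections import deque


class PointingDetector:
    """Hypothetical helper: reports a pointing gesture when exactly one finger is
    seen and the hand centroid stays still over a window of consecutive frames.

    window and max_shift are illustrative assumptions, not values from the article.
    """

    def __init__(self, window=5, max_shift=3.0):
        self.window = window          # number of consecutive frames to inspect
        self.max_shift = max_shift    # max centroid displacement (pixels) still counted as "not moving"
        self.history = deque(maxlen=window)

    def update(self, finger_count, hand_centroid):
        """Feed one frame's result; return True if the pointing condition holds."""
        self.history.append((finger_count, hand_centroid))
        if len(self.history) < self.window:
            return False  # not enough frames yet to judge stillness
        if any(count != 1 for count, _ in self.history):
            return False  # pointing requires exactly one extracted finger
        x0, y0 = self.history[0][1]
        # The hand counts as stationary if every centroid stays near the oldest one.
        return all(math.hypot(x - x0, y - y0) <= self.max_shift
                   for _, (x, y) in self.history)


detector = PointingDetector(window=3, max_shift=2.0)
for centroid in [(100, 50), (101, 50), (100, 51)]:
    is_pointing = detector.update(1, centroid)
print(is_pointing)  # True
```

Requiring several consecutive frames trades a short delay for robustness against a single misrecognized frame.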

Hand Region Extraction

We recognize a person by detecting his/her head. To reduce the computational cost, the hand search region is set relative to the center of the head region. Each image is converted to HSV color space to reduce the influence of brightness, and the hand region is extracted in the two-dimensional hue-saturation space.

Figure 2 - Finger area extraction

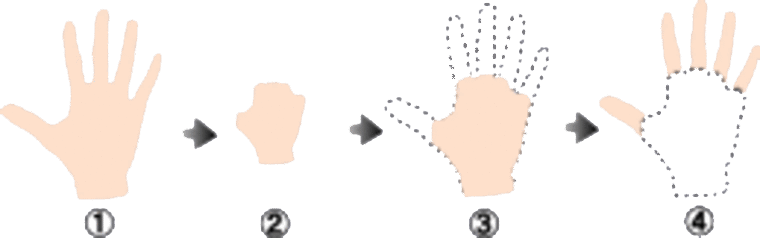

Finger Number Recognition

The number of fingers plays an important role in conveying the user's intention. The finger recognition process is illustrated in Figure 3. The extracted hand region (1) is shrunk until the finger regions disappear (2). This region is then expanded back to its original size (3). The difference between regions (1) and (3) gives the finger regions (4), and the number of finger regions is taken as the number of fingers.

Figure 3 - Recognizing the number of fingers

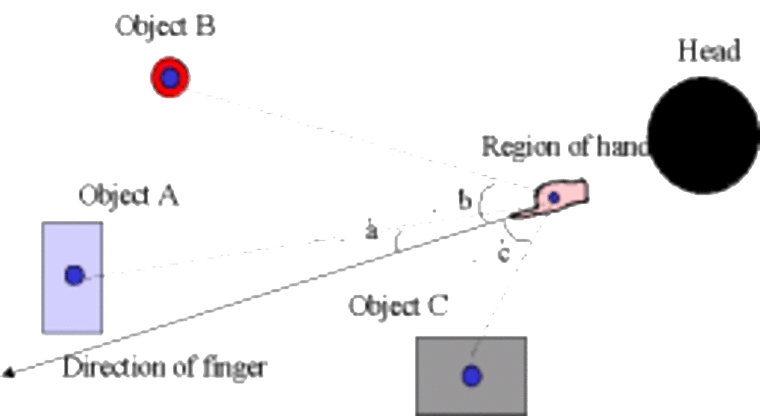
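The shrink, expand, and subtract procedure corresponds to a binary morphological opening (erosion followed by dilation) and a difference, often called a top-hat transform. The pure-Python sketch below assumes a 3×3 square structuring element, 4-connected components, and two hypothetical parameters: `iterations` (how far to shrink; it must be large enough to erase a finger) and `min_area` (to discard noise fragments). None of these values come from the article.

```python
from collections import deque

# 3x3 square structuring element (an assumption; the article does not specify one).
NEIGHBORS_3X3 = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]


def _morph(mask, iterations, combine):
    """Apply erosion (combine=all) or dilation (combine=any) `iterations` times.

    Pixels outside the image are treated as background.
    """
    rows, cols = len(mask), len(mask[0])
    for _ in range(iterations):
        mask = [[1 if combine(0 <= r + dr < rows and 0 <= c + dc < cols
                              and mask[r + dr][c + dc] == 1
                              for dr, dc in NEIGHBORS_3X3) else 0
                 for c in range(cols)]
                for r in range(rows)]
    return mask


def _count_components(mask, min_area):
    """Count 4-connected foreground components with at least min_area pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            seen[r][c] = True
            queue, area = deque([(r, c)]), 0
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:  # ignore tiny fragments left along the hand contour
                count += 1
    return count


def count_fingers(hand_mask, iterations=2, min_area=3):
    """Number of fingers = components of (hand region) minus (opened hand region)."""
    shrunk = _morph(hand_mask, iterations, all)    # step (2): fingers disappear
    restored = _morph(shrunk, iterations, any)     # step (3): palm back to full size
    fingers = [[1 if a == 1 and b == 0 else 0 for a, b in zip(row1, row3)]
               for row1, row3 in zip(hand_mask, restored)]  # step (4) = (1) - (3)
    return _count_components(fingers, min_area)


# Synthetic 12x14 hand: a 7x7 palm with three 1-pixel-wide fingers above it.
hand = [[0] * 14 for _ in range(12)]
for r in range(4, 11):
    for c in range(3, 10):
        hand[r][c] = 1
for c in (4, 6, 8):
    for r in range(0, 4):
        hand[r][c] = 1
print(count_fingers(hand))  # 3
```

A real hand mask is noisier than this synthetic one, so the shrink distance and the minimum fragment size would need tuning against actual camera images.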

Pointing Recognition

We assume that the user is pointing at an object when exactly one finger is extracted and the hand is stationary. The direction of the principal axis of the finger region is taken as the pointing direction. The locations of the target objects in the room are assumed to be known, so the direction from the hand to each object can be calculated, together with the angle between that direction and the pointing direction. The object with the smallest angle is recognized as the pointed-at object, provided that this angle is smaller than a threshold. For example, object A is recognized as the pointed-at object in Figure 4.

Figure 4 - Pointed object recognition

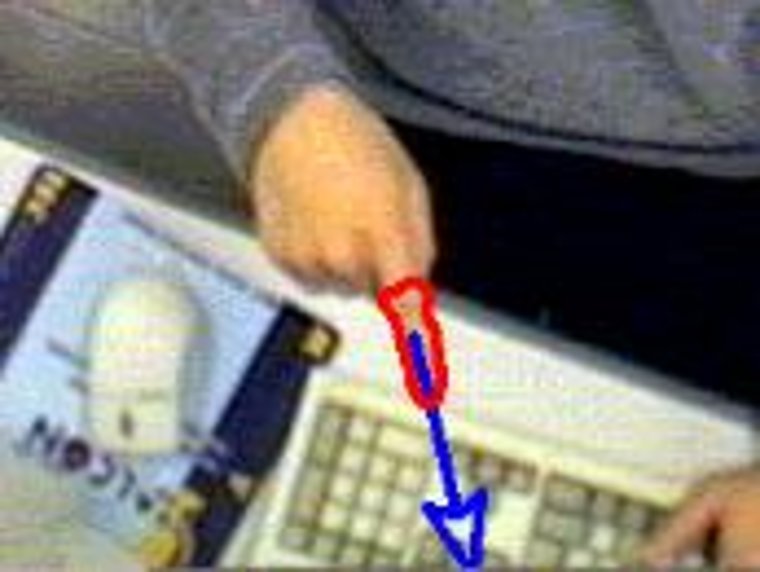
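The pointing step can be sketched as follows. The principal axis is computed from the central second-order moments of the finger pixels, θ = ½·atan2(2μ11, μ20 − μ02), which is a standard way to obtain a region's main axis but is an assumption about how the authors computed it. Because an axis has no sign, the sketch orients it away from the hand center. Pixel and object positions are assumed to be 2D (x, y) coordinates in the same frame (for example, positions on the floor plane seen by the ceiling camera), and the function names and the 20° threshold are hypothetical.

```python
import math


def pointing_direction(finger_pixels, hand_center):
    """Unit vector along the principal axis of the finger pixels ((x, y) tuples),
    oriented from the hand center toward the finger."""
    n = len(finger_pixels)
    mx = sum(x for x, _ in finger_pixels) / n
    my = sum(y for _, y in finger_pixels) / n
    # Central second-order moments of the finger region.
    mu20 = sum((x - mx) ** 2 for x, _ in finger_pixels) / n
    mu02 = sum((y - my) ** 2 for _, y in finger_pixels) / n
    mu11 = sum((x - mx) * (y - my) for x, y in finger_pixels) / n
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)  # principal-axis orientation
    dx, dy = math.cos(theta), math.sin(theta)
    # An axis has two possible signs; keep the one pointing away from the hand center.
    if dx * (mx - hand_center[0]) + dy * (my - hand_center[1]) < 0:
        dx, dy = -dx, -dy
    return dx, dy


def find_pointed_object(hand_pos, direction, objects, threshold_deg=20.0):
    """Return (name, angle_deg) of the object closest in angle to `direction`,
    or (None, angle_deg) if even the best angle is not below the threshold."""
    norm_d = math.hypot(*direction)
    best_name, best_angle = None, math.inf
    for name, (ox, oy) in objects.items():
        vx, vy = ox - hand_pos[0], oy - hand_pos[1]
        if vx == 0 and vy == 0:
            continue  # object at the hand position has no defined direction
        cos_a = (vx * direction[0] + vy * direction[1]) / (math.hypot(vx, vy) * norm_d)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))  # clamp rounding error
        if angle < best_angle:
            best_name, best_angle = name, angle
    if best_angle < threshold_deg:
        return best_name, best_angle
    return None, best_angle


finger = [(10, 5), (11, 5), (12, 5), (13, 5)]    # a horizontal finger
direction = pointing_direction(finger, hand_center=(5, 5))
objects = {"A": (30, 6), "B": (5, 30)}           # hypothetical object positions
print(find_pointed_object((5, 5), direction, objects))  # selects "A", about 2.3 degrees off the axis
```

The threshold is what lets the system answer "nothing is being pointed at" instead of always picking the nearest object in angle; the experiments below suggest it interacts with how closely spaced the objects are.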

Experiment

Our experimental system consists of a PC (Pentium III 500 MHz, Windows NT), a PXC200 image processing board, a ceiling-mounted color CCD camera, and the HALCON image processing software. Figure 5 shows an example from the hand region separation experiment: the head region and the hand region are separated, and the number of fingers is recognized as one.

Figure 5 - Example of recognized finger numbers

In our experiments with 200 samples for three objects, the average recognition rate for the number of fingers (0 to 5) is 96.0% (Figure 5).

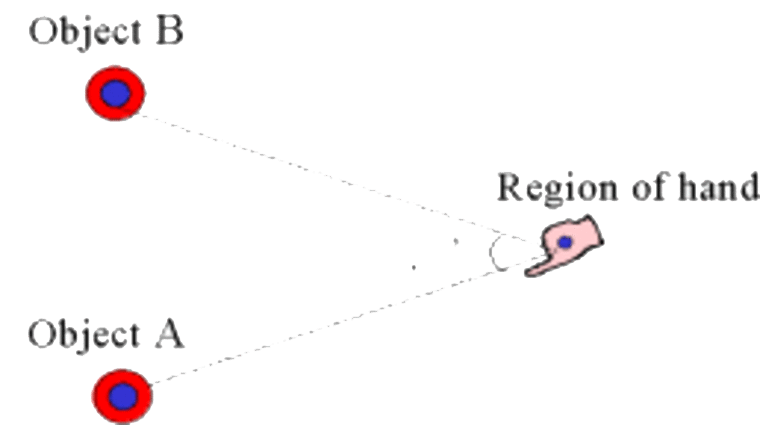

Pointing experiments with two objects are performed on 200 images, as shown in Figures 6 and 7. The angle between the two objects is set to 60°, 45°, 30°, and 15°, and the average recognition rate is 87.1%. When the angle is larger than 30°, the pointed-at object is recognized almost perfectly.

Figure 6 - Pointing direction recognition

Figure 7 - Recognized pointing direction

Future Plans

So far, methods for recognizing the number of fingers and the pointing direction of the hand have been developed and evaluated experimentally. In future work, a more practical gesture recognition system for smart rooms will be built.

Authors: Yuichi Nakanishi, Takashi Yamagishi, and Professor Kazunori Umeda, Chuo University, Japan